Analysing the Seeds data in INLA with a GAM/GLMM

Haakon Bakka

BTopic102 updated 10 Sept 2018

1 About

This is a detailed example of a good model for a simple dataset called Seeds. The goal is to show an example of INLA with a simple model, using both fixed effects and random effects. For an overview of the words and concepts see the word-list. The name BTopic102 is a unique identifier for the webpage.

1.1 Initialise R session

We load any packages and set global options. You may need to install these libraries (Installation and general troubleshooting).

library(INLA)2 Data on plant seeds

Here we load data, rename, rescale.

data(Seeds)This Seeds is the original dataframe. We will keep

Seeds unchanged, and create another object

(df, our modeling dataframe) later. Next we explain the

data.

# Run: ?Seeds

head(Seeds)## r n x1 x2 plate

## 1 10 39 0 0 1

## 2 23 62 0 0 2

## 3 23 81 0 0 3

## 4 26 51 0 0 4

## 5 17 39 0 0 5

## 6 5 6 0 1 6# - r is the number of seed germinated (successes)

# - n is the number of seeds attempted (trials)

# - x1 is the type of seed

# - x2 is the type of root extract

# - plate is the numbering of the plates/experimentsAll the covariates are 0/1 factors, and the numbering of the plates are arbitrary. We do not re-scale any covariates. The observations are integers, so we do not re-scale these either.

df = data.frame(y = Seeds$r, Ntrials = Seeds$n, Seeds[, 3:5])I always name the dataframe that is going to be used in the inference

to df, keeping the original dataframe. The observations are

always named \(y\).

2.1 Explore data

summary(df)## y Ntrials x1 x2 plate

## Min. : 0 Min. : 4 Min. :0.00 Min. :0.00 Min. : 1

## 1st Qu.: 8 1st Qu.:16 1st Qu.:0.00 1st Qu.:0.00 1st Qu.: 6

## Median :17 Median :39 Median :0.00 Median :1.00 Median :11

## Mean :20 Mean :40 Mean :0.48 Mean :0.52 Mean :11

## 3rd Qu.:26 3rd Qu.:51 3rd Qu.:1.00 3rd Qu.:1.00 3rd Qu.:16

## Max. :55 Max. :81 Max. :1.00 Max. :1.00 Max. :21table(df$x1)##

## 0 1

## 11 10table(df$x2)##

## 0 1

## 10 11This shows that no other covariates or factors are out of scale, or senseless. We note that there are several observations of each factor level.

Next we look at counfounding and outliers.

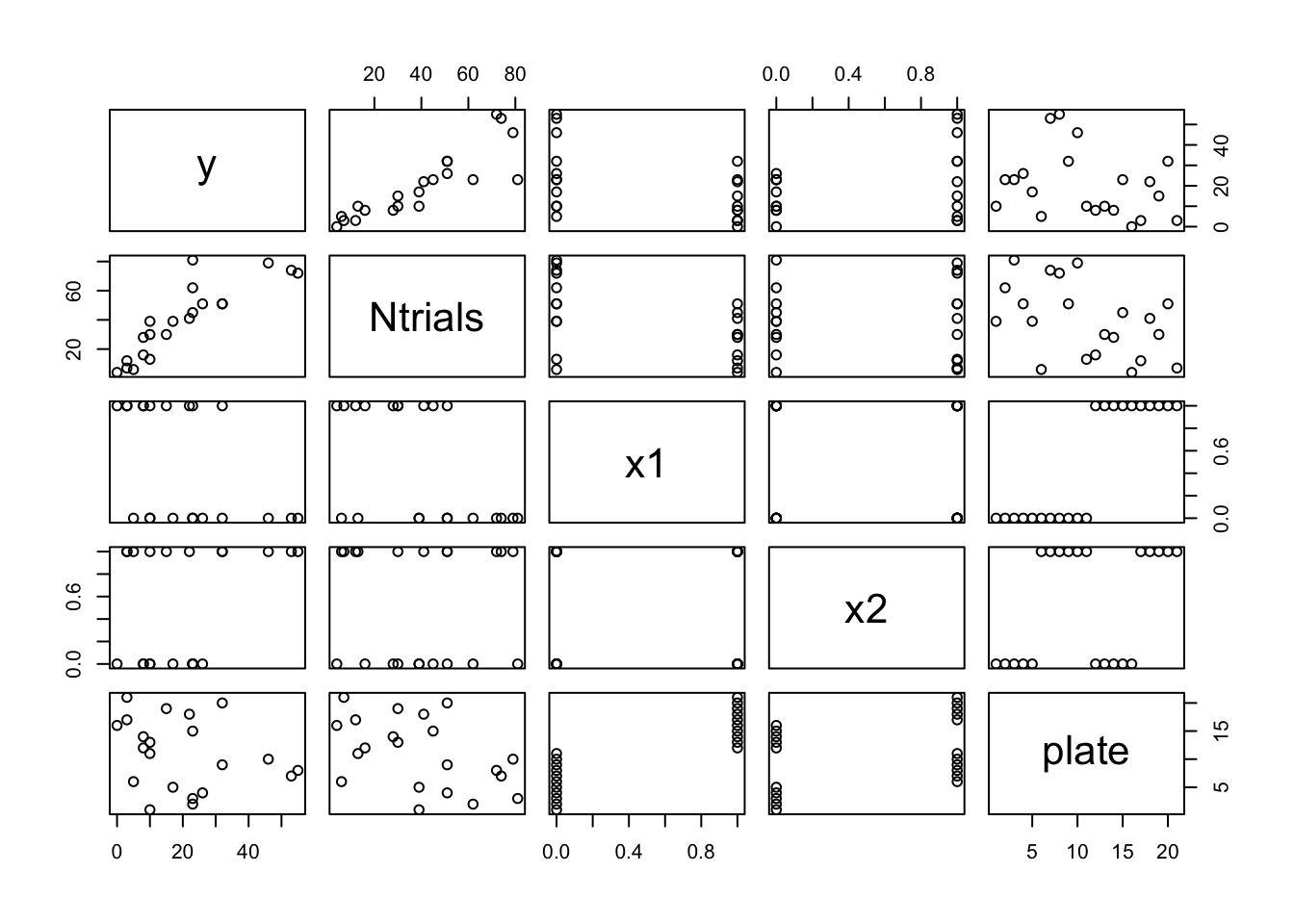

plot(df)

We see no problematic structure, or outliers. The relationship

between x1 and plate is not a problem (since

plate values will be treated as an arbitrary enumeration in

our model).

3 Observation Likelihood

family1 = "binomial"

control.family1 = list(control.link=list(model="logit"))

# number of trials is df$NtrialsThis specifies which likelihood we are going to use for the

observations. The binomial distribution is defined with a certain number

of trials, in INLA known as Ntrials. If there were

hyper-parameters in our likelihood, we would specify the priors on these

in control.family.

3.1 Mathematical description

The precise mathematical description of our likelihood is, \[y_i \sim \text{Binom}(N_i, p_i) \] with \[\eta_i = \text{logit}(p_i). \] We call \(\eta_i\) (“eta i”) the predictor (or: linear predictor, latent variable, hidden state, …). This \(\eta_i\) is a parameter where we believe a linear model to be somewhat sensible. (A linear model is completely senseless on \(p_i\).)

4 Formula

hyper1 = list(theta = list(prior="pc.prec", param=c(1,0.01)))

formula1 = y ~ x1 + x2 + f(plate, model="iid", hyper=hyper1)This specifies the formula, and the priors for any hyper-parameters

in the random effects. See inla.doc("pc.prec") for more

details on this prior.

4.1 Mathematical description

The precise mathematical description of the model for our predictor \(\eta_i\) is \[\eta_i = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + v_i, \] where \[\beta_i \sim \mathcal N(0, 1000), \] \[v_i \sim \mathcal N(0, \sigma_v^2). \]

This is not yet a Bayesian model, since we have not defined the prior distribution (“simulating distribution”) for all parameters. We assume an exponential prior on \(v_i\), i.e. \[\pi(\sigma_v) = \lambda e^{-\lambda \sigma_v}. \] This is not yet a Bayesian model, as we have not defined a prior for \(\lambda\). We fix \(\lambda\) so that \[\pi(\sigma_v > 1) = 0.01, \] which means that \(\lambda = \frac{-log(0.01)}{1} \approx 4.6\).

Now we have a fully specified Bayesian model. After this, everything else is “just computations”, and then interpreting the results.

5 Call INLA

Next we run the inla-call, where we collect the variables we have defined.

res1 = inla(formula=formula1, data=df,

family=family1, Ntrials=Ntrials,

control.family=control.family1)The Ntrials picks up the correct column in the

dataframe.

6 Look at results

summary(res1)##

## Call:

## c("inla.core(formula = formula, family = family, contrasts =

## contrasts, ", " data = data, quantiles = quantiles, E = E,

## offset = offset, ", " scale = scale, weights = weights,

## Ntrials = Ntrials, strata = strata, ", " lp.scale = lp.scale,

## link.covariates = link.covariates, verbose = verbose, ", "

## lincomb = lincomb, selection = selection, control.compute =

## control.compute, ", " control.predictor = control.predictor,

## control.family = control.family, ", " control.inla =

## control.inla, control.fixed = control.fixed, ", " control.mode

## = control.mode, control.expert = control.expert, ", "

## control.hazard = control.hazard, control.lincomb =

## control.lincomb, ", " control.update = control.update,

## control.lp.scale = control.lp.scale, ", " control.pardiso =

## control.pardiso, only.hyperparam = only.hyperparam, ", "

## inla.call = inla.call, inla.arg = inla.arg, num.threads =

## num.threads, ", " blas.num.threads = blas.num.threads, keep =

## keep, working.directory = working.directory, ", " silent =

## silent, inla.mode = inla.mode, safe = FALSE, debug = debug, ",

## " .parent.frame = .parent.frame)")

## Time used:

## Pre = 3.34, Running = 6.01, Post = 0.025, Total = 9.38

## Fixed effects:

## mean sd 0.025quant 0.5quant 0.97quant mode kld

## (Intercept) -0.39 0.18 -0.74 -0.39 -0.031 -0.40 0

## x1 -0.36 0.23 -0.84 -0.35 0.059 -0.34 0

## x2 1.03 0.22 0.59 1.03 1.452 1.04 0

##

## Random effects:

## Name Model

## plate IID model

##

## Model hyperparameters:

## mean sd 0.025quant 0.5quant 0.97quant mode

## Precision for plate 21.39 41.32 2.96 10.62 88.69 6.06

##

## Marginal log-Likelihood: -68.44

## is computed

## Posterior summaries for the linear predictor and the fitted values are computed

## (Posterior marginals needs also 'control.compute=list(return.marginals.predictor=TRUE)')This summary shows many elements of the results, notably not including the random effects (the parameters are not shown). The total result, however, is a complex high-dimensional posterior.

# Run: str(res1, 1)This command, if run, shows one step in the list-of-lists hierarchy that is the inla result.

7 Look at the random effect

res1$summary.random$plate## ID mean sd 0.025quant 0.5quant 0.97quant mode kld

## 1 1 -0.309 0.28 -0.927 -0.282 0.150 -0.2148 9.7e-07

## 2 2 -0.087 0.23 -0.578 -0.076 0.342 -0.0412 4.0e-08

## 3 3 -0.333 0.25 -0.869 -0.315 0.081 -0.2778 2.7e-07

## 4 4 0.227 0.25 -0.221 0.209 0.729 0.1572 2.5e-07

## 5 5 0.056 0.25 -0.436 0.050 0.543 0.0295 2.1e-08

## 6 6 0.111 0.33 -0.507 0.086 0.790 0.0324 2.5e-06

## 7 7 0.167 0.23 -0.272 0.153 0.637 0.1036 1.1e-07

## 8 8 0.300 0.25 -0.139 0.281 0.812 0.2401 3.0e-07

## 9 9 -0.063 0.24 -0.564 -0.055 0.385 -0.0297 2.2e-08

## 10 10 -0.195 0.23 -0.687 -0.180 0.209 -0.1316 1.9e-07

## 11 11 0.128 0.30 -0.441 0.104 0.757 0.0440 4.2e-07

## 12 12 0.222 0.31 -0.317 0.188 0.877 0.0850 1.1e-06

## 13 13 0.021 0.27 -0.511 0.016 0.549 0.0051 2.6e-09

## 14 14 -0.065 0.27 -0.630 -0.056 0.447 -0.0288 1.9e-08

## 15 15 0.410 0.30 -0.081 0.384 1.023 0.3320 4.1e-07

## 16 16 -0.141 0.34 -0.906 -0.109 0.458 -0.0399 9.1e-06

## 17 17 -0.316 0.33 -1.071 -0.274 0.215 -0.1383 2.5e-06

## 18 18 -0.060 0.25 -0.572 -0.055 0.419 -0.0349 1.6e-08

## 19 19 -0.114 0.26 -0.668 -0.101 0.372 -0.0560 5.7e-08

## 20 20 0.135 0.25 -0.332 0.117 0.651 0.0603 1.4e-07

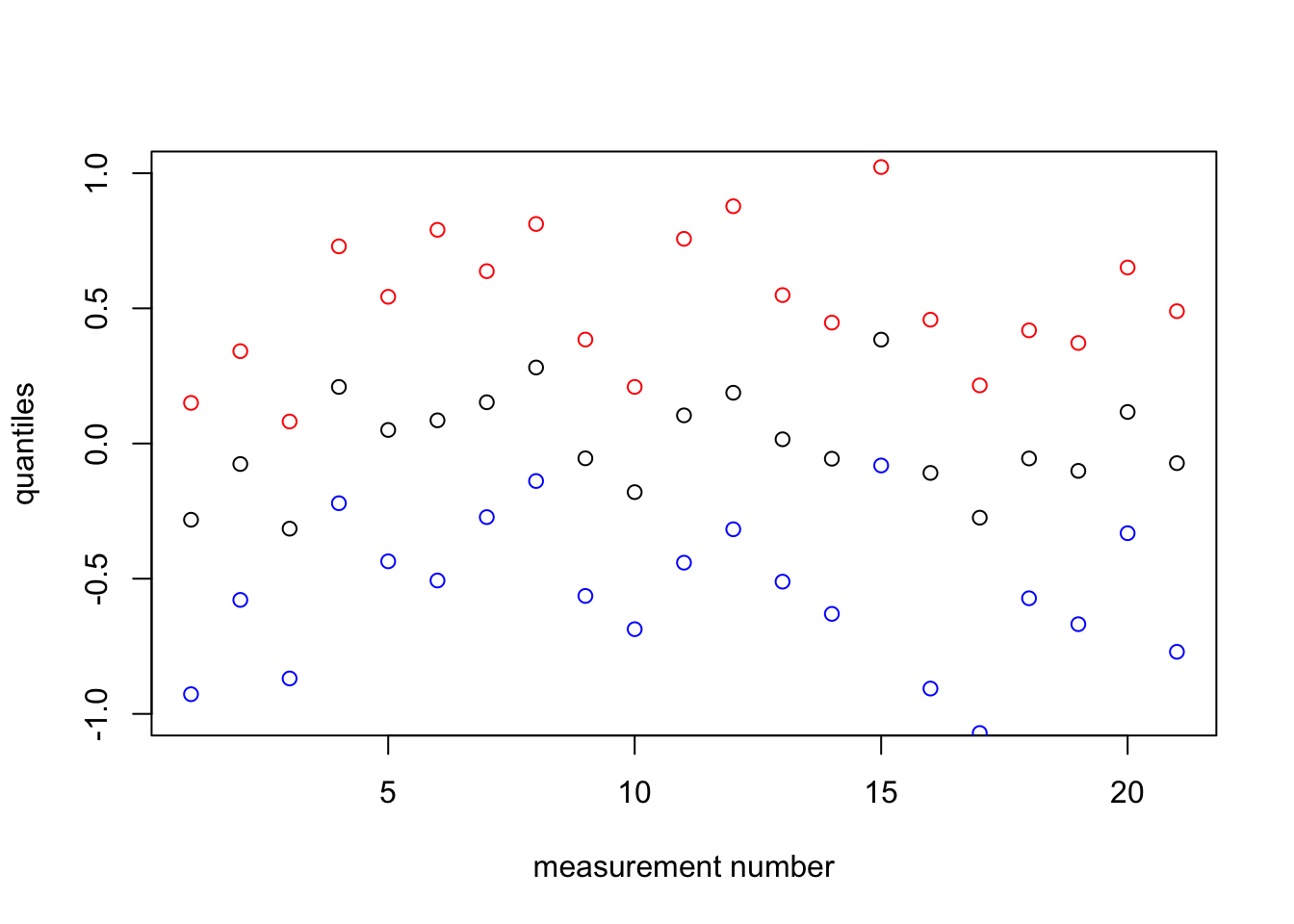

## 21 21 -0.091 0.32 -0.770 -0.073 0.489 -0.0281 5.6e-078 Plot the random effect quantiles

plot(1:nrow(df), res1$summary.random$plate$`0.97`, col="red", ylim=c(-1,1),

xlab="measurement number", ylab = "quantiles")

points(1:nrow(df), res1$summary.random$plate$`0.5quant`)

points(1:nrow(df), res1$summary.random$plate$`0.02`, col="blue")

The reason we use points instead of lines is that the numbering of the plates / experiments is arbitrary. Lines would falsely imply that one observation came “before/after” another (in time or on some other covariate).

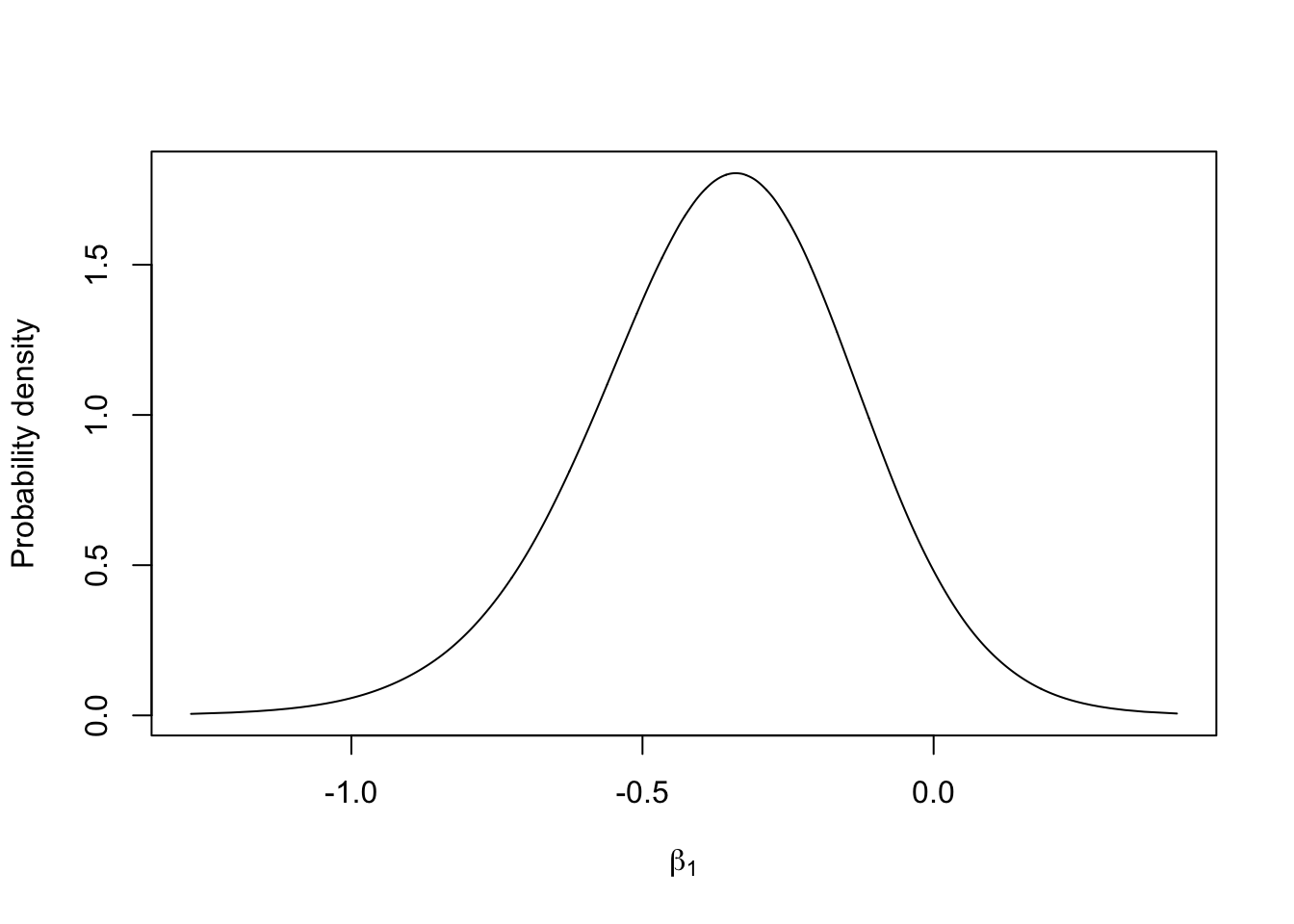

9 Plot the marginal of a fixed effect

m.beta1 = inla.tmarginal(fun = function(x) x, marginal =

res1$marginals.fixed$x1)

# - this transformation is the identity (does nothing)

# - m.beta1 is the marginal for the coefficient in front of the x1 covariate

plot(m.beta1, type="l", xlab = expression(beta[1]), ylab = "Probability density")

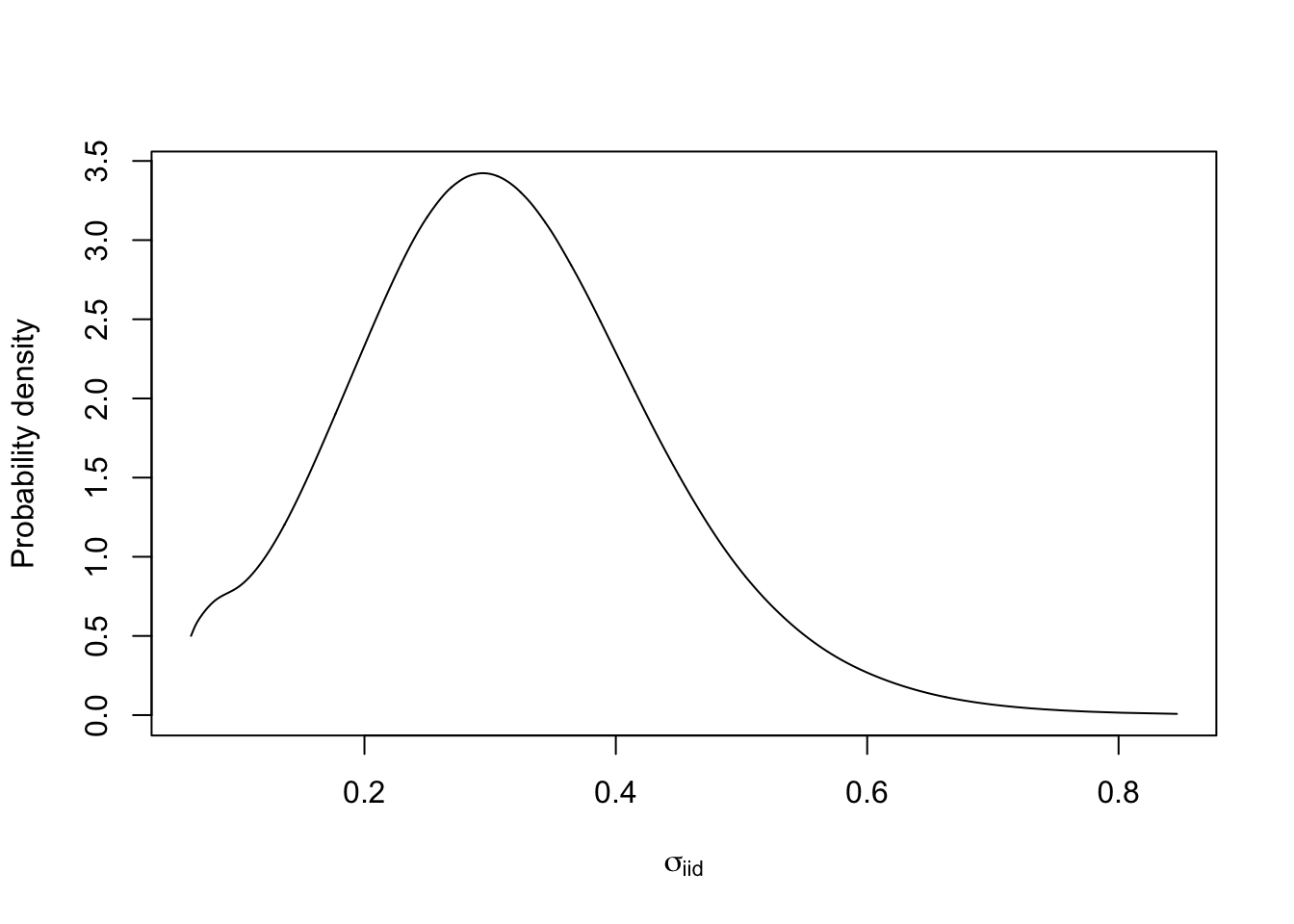

10 Plot the marginal of a hyper-parameter

We must transform the marginal

(res1$marginals.hyperpar$"Precision for plate") to a

parametrisation that makes sense to use for interpretation. The only

parametrisation I like is \(\sigma\),

marginal standard deviation. For numerical reasons, we need to transform

the internal marginals. To find define the function used in the

transformation, we look up the internal parametrisation, which is \(\log(precision)\), see

inla.doc("iid").

m.sigma = inla.tmarginal(fun = function(x) exp(-1/2*x), marginal =

res1$internal.marginals.hyperpar$`Log precision for plate`)

# - m.sigma is the marginal for the standard deviation parameter in the iid random effect

plot(m.sigma, type="l", xlab = expression(sigma[iid]), ylab = "Probability density")

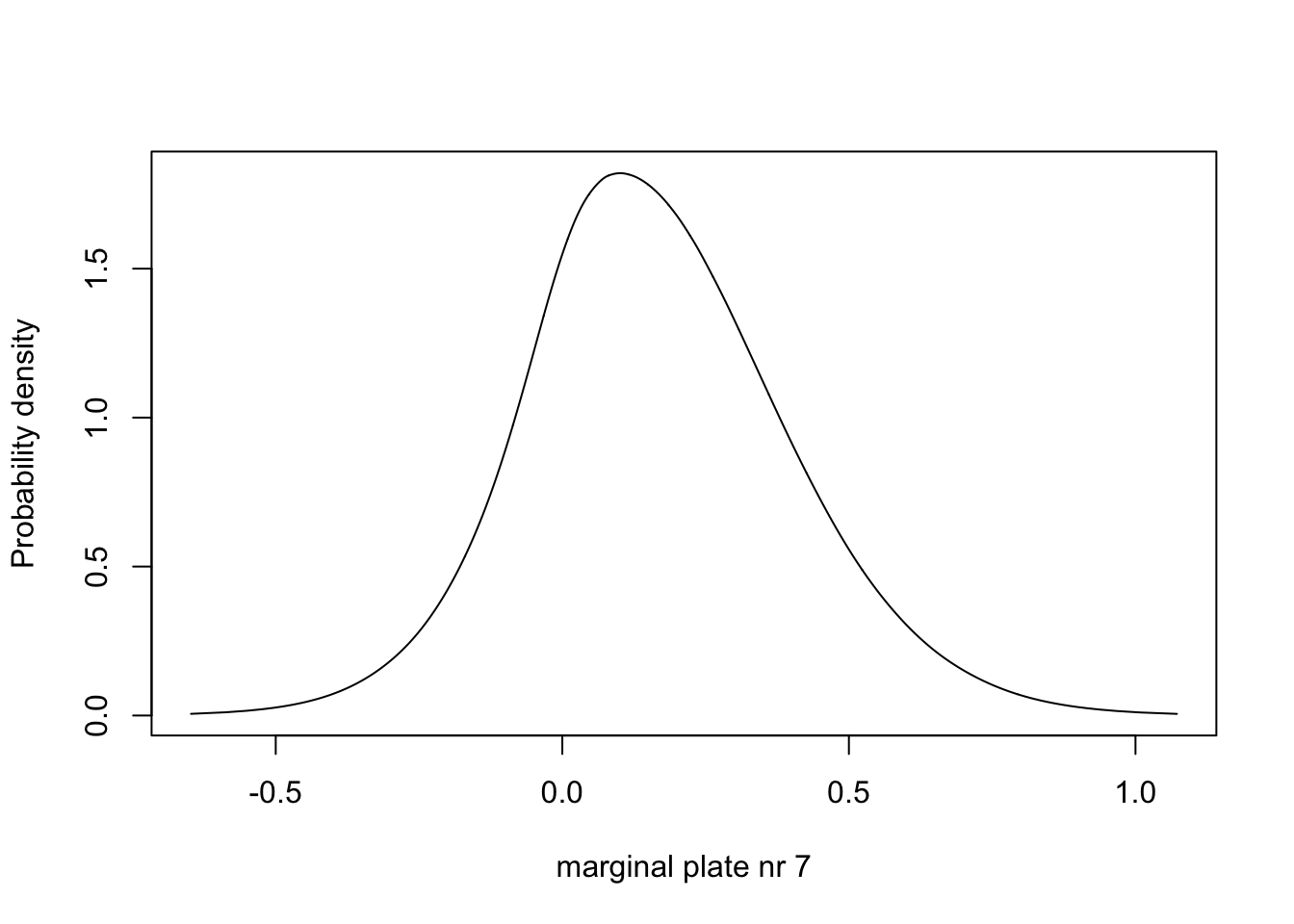

11 Plot the marginal of a parameter

m.plate.7 = inla.tmarginal(fun = function(x) x, marginal =

res1$marginals.random$plate$index.7)

# - m.plate.7 is one of the marginals for the parameters beloning to the plate iid effect

# - it is number 7, which corresponds to plate=7, which is our 7th row of data

plot(m.plate.7, type="l", xlab = "marginal plate nr 7", ylab = "Probability density")

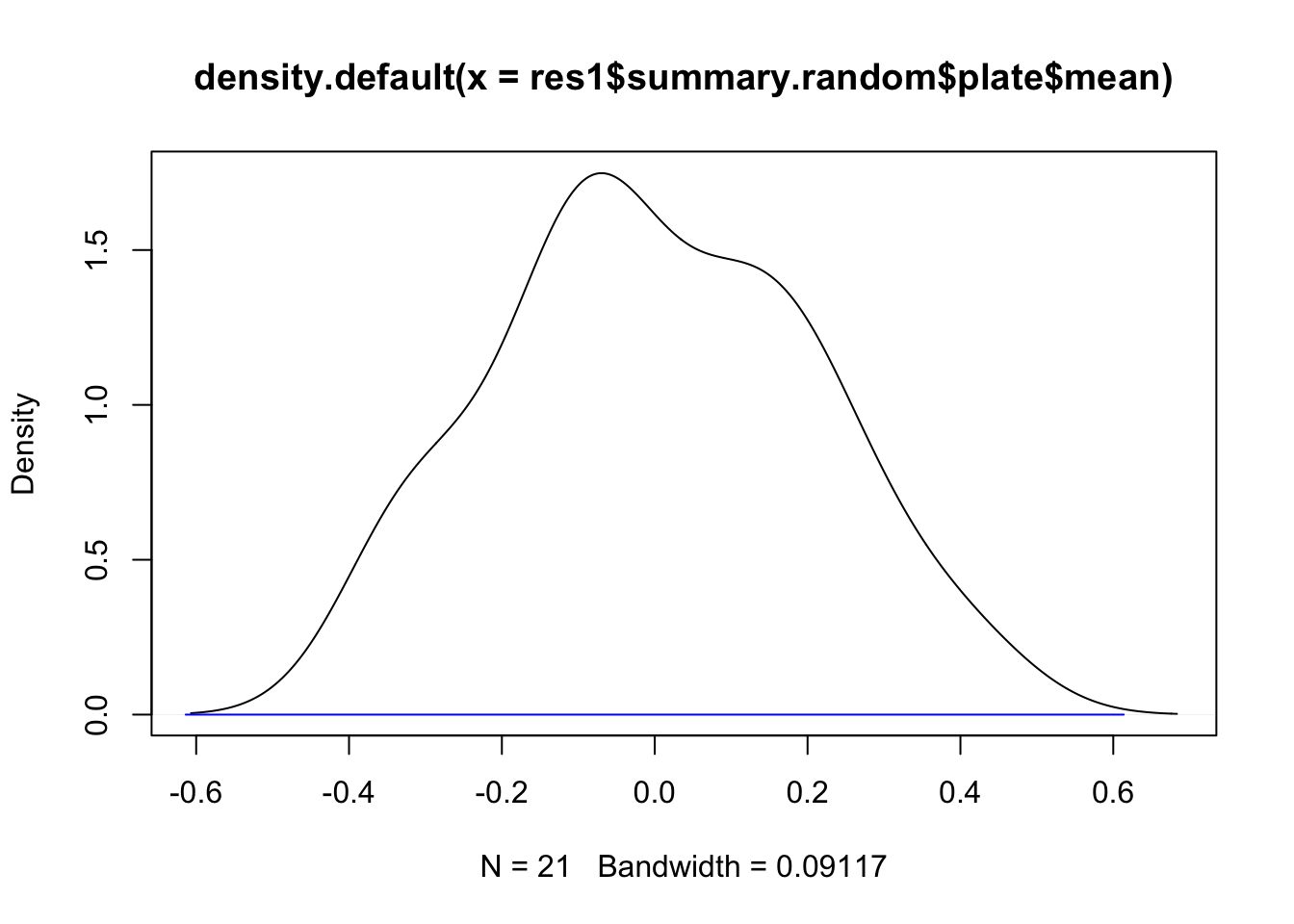

12 Plot the distribution of random effect estimates

Here we take the point estimates of the iid effect (posterior marginal medians).

plot(density(res1$summary.random$plate$mean))

lines(0+c(-2, 2)*res1$summary.hyperpar$`0.5quant`^(-0.5) , c(0,0), col="blue")

# - draw a blue line for plus/minus 2 sigmaFrom this plot we see that the estimates of the random effect give a nice distribution, ending up within two standard deviations from zero. The assumption that the random effect is iid Gaussian looks reasonable.

13 Predictions

What if we want to predict? On the same plate? On a new plate? With new covariate values? Here we provide one example.

df2 = rbind(df, c(NA, 1, 0, 0, 22))

tail(df2)## y Ntrials x1 x2 plate

## 17 3 12 1 1 17

## 18 22 41 1 1 18

## 19 15 30 1 1 19

## 20 32 51 1 1 20

## 21 3 7 1 1 21

## 22 NA 1 0 0 22res.pred = inla(formula=formula1, data=df2,

family=family1, Ntrials=Ntrials,

control.predictor = list(compute=T, link = 1),

# - to get the posterior of the predictor and fitted values

control.family=control.family1)res.pred$summary.fitted.values[22, ]## mean sd 0.025quant 0.5quant 0.97quant mode

## fitted.Predictor.22 0.41 0.091 0.24 0.4 0.6 0.4# - this is the inv.logit(eta_i), namely p_i14 Comparing two models

An alternative to using the logit link would be to use the probit link. Changing the likelihood in this way also changes the behaviour of the linear predictor.

control.family2 = list(control.link=list(model="probit"))

res1 = inla(formula=formula1, data=df,

family=family1, Ntrials=Ntrials,

control.predictor = list(compute=T, link=1),

# - to get the posterior of the predictor and fitted values

control.family=control.family1)

res2 = inla(formula=formula1, data=df,

family=family1, Ntrials=Ntrials,

control.predictor = list(compute=T, link=1),

# - to get the posterior of the predictor and fitted values

control.family=control.family2)a = data.frame(y=df$y, ps=df$y/df$Ntrials,

r1eta = res1$summary.linear.predictor$mean,

r2eta = res2$summary.linear.predictor$mean,

r1fit = res1$summary.fitted.values$mean,

r2fit = res2$summary.fitted.values$mean)

round(a, 2)## y ps r1eta r2eta r1fit r2fit

## 1 10 0.26 -0.69 -0.45 0.34 0.33

## 2 23 0.37 -0.47 -0.30 0.39 0.38

## 3 23 0.28 -0.72 -0.46 0.33 0.32

## 4 26 0.51 -0.16 -0.09 0.46 0.47

## 5 17 0.44 -0.33 -0.20 0.42 0.42

## 6 5 0.83 0.75 0.48 0.68 0.68

## 7 53 0.72 0.81 0.51 0.69 0.69

## 8 55 0.76 0.94 0.60 0.72 0.72

## 9 32 0.63 0.58 0.36 0.64 0.64

## 10 46 0.58 0.45 0.27 0.61 0.61

## 11 10 0.77 0.77 0.49 0.68 0.68

## 12 8 0.50 -0.53 -0.31 0.37 0.38

## 13 10 0.33 -0.73 -0.45 0.33 0.33

## 14 8 0.29 -0.81 -0.51 0.31 0.31

## 15 23 0.51 -0.34 -0.19 0.42 0.43

## 16 0 0.00 -0.89 -0.57 0.30 0.29

## 17 3 0.25 -0.03 -0.04 0.49 0.48

## 18 22 0.54 0.23 0.13 0.56 0.55

## 19 15 0.50 0.17 0.10 0.54 0.54

## 20 32 0.63 0.42 0.26 0.60 0.60

## 21 3 0.43 0.20 0.11 0.55 0.54

15 Comments

15.1 See also

Read the review paper (see Rue et al. 2016), and see the references therein! Also, explore www.r-inla.org, both the resources and the discussion group. And, use the function

inla.docinRto find information related to a keyword (e.g. a likelihood).15.2 More details on

dfin generalThis dataframe contains only numerical values, and all factors have been expanded. All covariates have a mean near zero and a standard deviation near 1 (i.e. 0.1<sd<10). They are also transformed to the appropriate scale, often with a

log.15.3 Mean, median or mode?

What point estimate should you use? Mean, median or mode?

The mode is usually the wrong answer. This is where the posterior is maximised, which would be similar to a penalised maximum likelihood. But, it is generally understood that the median and mean are better, both as they have nicer statistical properties, a more “valid” loss function, and experience shows that they are more stable and less error prone.

Choosing between the median and mean is more difficult. In most cases, the difference is very small, especially for “good” parametrisations. I personally prefer the median (0.5 quantile), for several reasons

You can transform (between parametrisations) “directly” (eg using

^(-0.5)as in the code),Estimates are independent of the internal choice of parameters (in the computer code).

I prefer the interpretation of the absolute loss function to that of a quadratic loss function for hyper-parameters.