Predictions with iid effects - binomial example

Haakon Bakka

BTopic112 updated 23. May 2017

1 About

In this topic we discuss how to do predictions with parts of the linear predictor. We use a general approach that should be applicable to all prediction scenarios.

The goal is to learn how to build up simulations for model fit and predictions. There are more convenient approaches if you only want to summarise or plot part of the posterior.

We recommend reading btopic102 first, as this explains the data and the model.

1.1 Initialise R session

We load any packages and set global options. You may need to install these libraries (Installation and general troubleshooting).

library(INLA)

rm(list=ls())

options(width=70, digits=2)

set.seed(2017)2 Load data, rename, rescale

data(Seeds)

df = data.frame(y = Seeds$r, Ntrials = Seeds$n, Seeds[, 3:5])3 Model

3.1 Observation Likelihood

family1 = "binomial"

control.family1 = list(control.link=list(model="logit"))

# number of trials is df$Ntrials3.2 Formula

hyper1 = list(theta = list(prior="pc.prec", param=c(1,0.01)))

formula1 = y ~ x1 + x2 + f(plate, model="iid", hyper=hyper1)4 Call INLA

res1 = inla(formula=formula1, data=df,

family=family1, Ntrials=Ntrials,

control.family=control.family1,

control.predictor=list(compute=T),

control.compute=list(config=T))4.1 Look at results

summary(res1)##

## Call:

## c("inla.core(formula = formula, family = family, contrasts =

## contrasts, ", " data = data, quantiles = quantiles, E = E,

## offset = offset, ", " scale = scale, weights = weights,

## Ntrials = Ntrials, strata = strata, ", " lp.scale = lp.scale,

## link.covariates = link.covariates, verbose = verbose, ", "

## lincomb = lincomb, selection = selection, control.compute =

## control.compute, ", " control.predictor = control.predictor,

## control.family = control.family, ", " control.inla =

## control.inla, control.fixed = control.fixed, ", " control.mode

## = control.mode, control.expert = control.expert, ", "

## control.hazard = control.hazard, control.lincomb =

## control.lincomb, ", " control.update = control.update,

## control.lp.scale = control.lp.scale, ", " control.pardiso =

## control.pardiso, only.hyperparam = only.hyperparam, ", "

## inla.call = inla.call, inla.arg = inla.arg, num.threads =

## num.threads, ", " blas.num.threads = blas.num.threads, keep =

## keep, working.directory = working.directory, ", " silent =

## silent, inla.mode = inla.mode, safe = FALSE, debug = debug, ",

## " .parent.frame = .parent.frame)")

## Time used:

## Pre = 2.99, Running = 0.533, Post = 0.0534, Total = 3.57

## Fixed effects:

## mean sd 0.025quant 0.5quant 0.97quant mode kld

## (Intercept) -0.39 0.18 -0.74 -0.39 -0.031 -0.40 0

## x1 -0.36 0.23 -0.84 -0.35 0.059 -0.34 0

## x2 1.03 0.22 0.59 1.03 1.452 1.04 0

##

## Random effects:

## Name Model

## plate IID model

##

## Model hyperparameters:

## mean sd 0.025quant 0.5quant 0.97quant mode

## Precision for plate 21.39 41.32 2.96 10.62 88.69 6.06

##

## Marginal log-Likelihood: -68.44

## is computed

## Posterior summaries for the linear predictor and the fitted values are computed

## (Posterior marginals needs also 'control.compute=list(return.marginals.predictor=TRUE)')5 Draw posterior samples

n.samples = 1000

samples = inla.posterior.sample(n.samples, result = res1)This function draws posterior samples from the linear predictor and all its components. The samples from the hyper-parameters are only samples from the integration grid (which represents the only hyper-parameter values to compute the posterior of all the latent variables).

5.1 The structure of the samples

The samples is a list of posterior samples, let us look

at the first sample.

str(samples[[1]])## List of 3

## $ hyperpar: Named num 1.75

## ..- attr(*, "names")= chr "Precision for plate"

## $ latent : num [1:45, 1] -0.458 -0.523 -0.83 0.562 -0.367 ...

## ..- attr(*, "dimnames")=List of 2

## .. ..$ : chr [1:45] "Predictor:1" "Predictor:2" "Predictor:3" "Predictor:4" ...

## .. ..$ : NULL

## $ logdens :List of 3

## ..$ hyperpar: num -4.76

## ..$ latent : num -10.7

## ..$ joint : num -15.5Here, $hyperpar is the part of the first sample

representing the hyper-parameters, $latent represents all

the latent variables, and $logdens is the prior and

posterior log probability densities. The $latent, however,

is quite complicated.

t(samples[[1]]$latent)## Predictor:1 Predictor:2 Predictor:3 Predictor:4 Predictor:5

## [1,] -0.46 -0.52 -0.83 0.56 -0.37

## Predictor:6 Predictor:7 Predictor:8 Predictor:9 Predictor:10

## [1,] 0.9 0.55 1.3 0.48 0.46

## Predictor:11 Predictor:12 Predictor:13 Predictor:14 Predictor:15

## [1,] 1.5 0.74 -0.9 -1.1 0.55

## Predictor:16 Predictor:17 Predictor:18 Predictor:19 Predictor:20

## [1,] -2.1 -0.65 0.55 0.19 -0.04

## Predictor:21 plate:1 plate:2 plate:3 plate:4 plate:5 plate:6

## [1,] 0.56 -0.29 -0.36 -0.67 0.73 -0.2 0.072

## plate:7 plate:8 plate:9 plate:10 plate:11 plate:12 plate:13

## [1,] -0.28 0.49 -0.34 -0.36 0.69 1.5 -0.14

## plate:14 plate:15 plate:16 plate:17 plate:18 plate:19 plate:20

## [1,] -0.38 1.3 -1.3 -0.88 0.32 -0.045 -0.27

## plate:21 (Intercept):1 x1:1 x2:1

## [1,] 0.33 -0.16 -0.59 0.99# - transposed for shorter printing

# - this is a column vectorHere, the names show which part of the latent field these sample

values are from. First comes the Predictor, wich is \(\eta\) the linear predictor, then comes the

random effect named plate, with its values at each plate

level. Then comes the fixed effects (one of them is called

(Intercept)).

5.2 How to access the

right part of $latent

The difficulty now is making generic code that will work for very complex models. E.g. in a spatial model, it would be horrific to have to read all the names and figure which was which.

I will now create a generic code though the use of a hidden part of the INLA result object.

res1$misc$configs$contents## $tag

## [1] "Predictor" "plate" "(Intercept)" "x1"

## [5] "x2"

##

## $start

## [1] 1 22 43 44 45

##

## $length

## [1] 21 21 1 1 1Here, you see the names of the latent components, and their start

index and their length. Using this, we can find the indices for

plate in the following way.

contents = res1$misc$configs$contents

effect = "plate"

id.effect = which(contents$tag==effect)

# - the numerical id of the effect

ind.effect = contents$start[id.effect]-1 + (1:contents$length[id.effect])

# - all the indices for the effect

# - these are the indexes in the sample[[1]]$latent !The first sample from plate, and all the samples from

plate can then be found.

## See an example for the first sample

samples[[1]]$latent[ind.effect, , drop=F]## [,1]

## plate:1 -0.294

## plate:2 -0.358

## plate:3 -0.666

## plate:4 0.727

## plate:5 -0.202

## plate:6 0.072

## plate:7 -0.276

## plate:8 0.490

## plate:9 -0.343

## plate:10 -0.362

## plate:11 0.689

## plate:12 1.500

## plate:13 -0.136

## plate:14 -0.377

## plate:15 1.309

## plate:16 -1.328

## plate:17 -0.884

## plate:18 0.317

## plate:19 -0.045

## plate:20 -0.272

## plate:21 0.326## Draw this part of every sample

samples.effect = lapply(samples, function(x) x$latent[ind.effect])5.3 Matrix output

We create a more readable version of these samples.

s.eff = matrix(unlist(samples.effect), byrow = T, nrow = length(samples.effect))

# - s.eff means samples.effect, just as a matrix

colnames(s.eff) = rownames(samples[[1]]$latent)[ind.effect]

# - retrieve names from original object

summary(s.eff)## plate:1 plate:2 plate:3 plate:4

## Min. :-1.31 Min. :-0.89 Min. :-1.13 Min. :-0.54

## 1st Qu.:-0.50 1st Qu.:-0.22 1st Qu.:-0.50 1st Qu.: 0.05

## Median :-0.29 Median :-0.08 Median :-0.33 Median : 0.22

## Mean :-0.32 Mean :-0.09 Mean :-0.33 Mean : 0.23

## 3rd Qu.:-0.12 3rd Qu.: 0.05 3rd Qu.:-0.16 3rd Qu.: 0.39

## Max. : 0.37 Max. : 0.83 Max. : 0.41 Max. : 1.20

## plate:5 plate:6 plate:7 plate:8

## Min. :-0.96 Min. :-1.32 Min. :-0.54 Min. :-0.55

## 1st Qu.:-0.09 1st Qu.:-0.09 1st Qu.: 0.02 1st Qu.: 0.14

## Median : 0.06 Median : 0.09 Median : 0.15 Median : 0.30

## Mean : 0.06 Mean : 0.12 Mean : 0.17 Mean : 0.31

## 3rd Qu.: 0.23 3rd Qu.: 0.32 3rd Qu.: 0.32 3rd Qu.: 0.48

## Max. : 1.08 Max. : 1.53 Max. : 1.09 Max. : 1.48

## plate:9 plate:10 plate:11 plate:12

## Min. :-0.88 Min. :-1.10 Min. :-0.80 Min. :-0.87

## 1st Qu.:-0.22 1st Qu.:-0.35 1st Qu.:-0.07 1st Qu.: 0.04

## Median :-0.05 Median :-0.19 Median : 0.10 Median : 0.21

## Mean :-0.06 Mean :-0.20 Mean : 0.13 Mean : 0.24

## 3rd Qu.: 0.08 3rd Qu.:-0.03 3rd Qu.: 0.31 3rd Qu.: 0.42

## Max. : 0.75 Max. : 0.61 Max. : 1.54 Max. : 1.50

## plate:13 plate:14 plate:15 plate:16

## Min. :-1.00 Min. :-0.97 Min. :-0.40 Min. :-1.77

## 1st Qu.:-0.14 1st Qu.:-0.26 1st Qu.: 0.20 1st Qu.:-0.34

## Median : 0.04 Median :-0.08 Median : 0.38 Median :-0.12

## Mean : 0.03 Mean :-0.08 Mean : 0.42 Mean :-0.15

## 3rd Qu.: 0.19 3rd Qu.: 0.08 3rd Qu.: 0.62 3rd Qu.: 0.07

## Max. : 0.97 Max. : 0.70 Max. : 1.50 Max. : 0.98

## plate:17 plate:18 plate:19 plate:20

## Min. :-1.56 Min. :-1.00 Min. :-1.21 Min. :-0.58

## 1st Qu.:-0.51 1st Qu.:-0.21 1st Qu.:-0.27 1st Qu.:-0.03

## Median :-0.28 Median :-0.04 Median :-0.11 Median : 0.12

## Mean :-0.31 Mean :-0.06 Mean :-0.11 Mean : 0.14

## 3rd Qu.:-0.08 3rd Qu.: 0.10 3rd Qu.: 0.05 3rd Qu.: 0.30

## Max. : 0.54 Max. : 0.81 Max. : 0.83 Max. : 1.32

## plate:21

## Min. :-1.35

## 1st Qu.:-0.27

## Median :-0.08

## Mean :-0.09

## 3rd Qu.: 0.11

## Max. : 1.025.4 Verifying output

We compare these samples to the usual posterior summaries.

cbind(colMeans(s.eff), res1$summary.random$plate$mean)## [,1] [,2]

## plate:1 -0.322 -0.309

## plate:2 -0.085 -0.087

## plate:3 -0.335 -0.333

## plate:4 0.235 0.227

## plate:5 0.065 0.056

## plate:6 0.117 0.111

## plate:7 0.166 0.167

## plate:8 0.311 0.300

## plate:9 -0.065 -0.063

## plate:10 -0.197 -0.195

## plate:11 0.126 0.128

## plate:12 0.237 0.222

## plate:13 0.032 0.021

## plate:14 -0.080 -0.065

## plate:15 0.420 0.410

## plate:16 -0.149 -0.141

## plate:17 -0.312 -0.316

## plate:18 -0.057 -0.060

## plate:19 -0.107 -0.114

## plate:20 0.144 0.135

## plate:21 -0.086 -0.0916 Prediction where we have data

We want to compute a prediction where we already have a datapoint. The goal is to predict for observation 7, which is:

df[7, ]## y Ntrials x1 x2 plate

## 7 53 74 0 1 76.1 Using the linear predictor

The easiest approach is to use the linear predictor. Since this is

the 7th row of df, we pick out the 7th predictor. Noe: The

value of plate is not important, that is just a

covariate value (which happens to be the same as the rown number). The

linear predictor always start at the first element of

$latent, so we do not need to look for it.

## see one example value

samples[[1]]$latent[7, , drop=F]## [,1]

## Predictor:7 0.55## Draw this part of every sample

samples.pred7 = lapply(samples, function(x) x$latent[7])

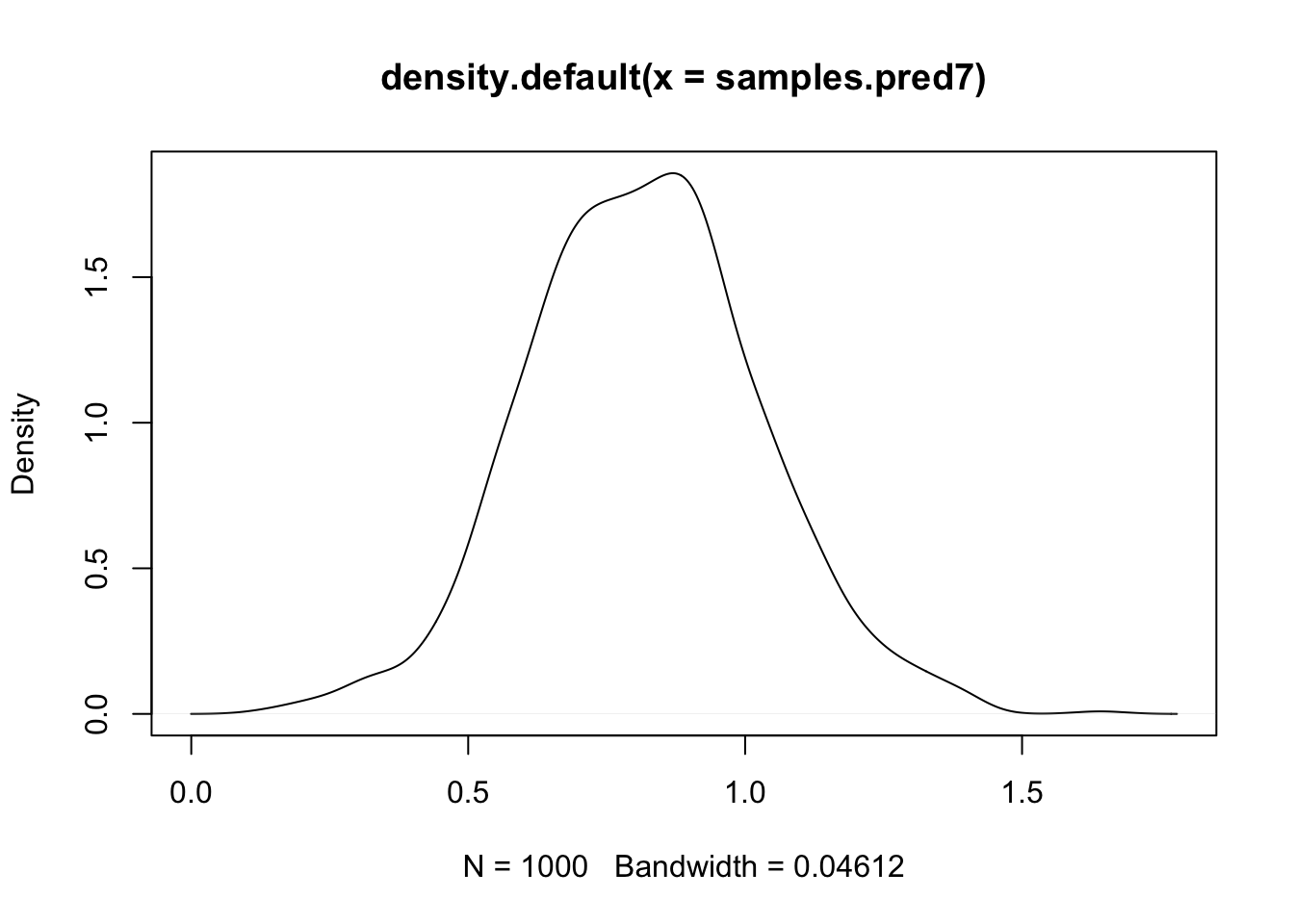

samples.pred7 = unlist(samples.pred7)Plot a density of this sample. You could also do a histogram with

truehist.

plot(density(samples.pred7))

abline(v=df$y[7])

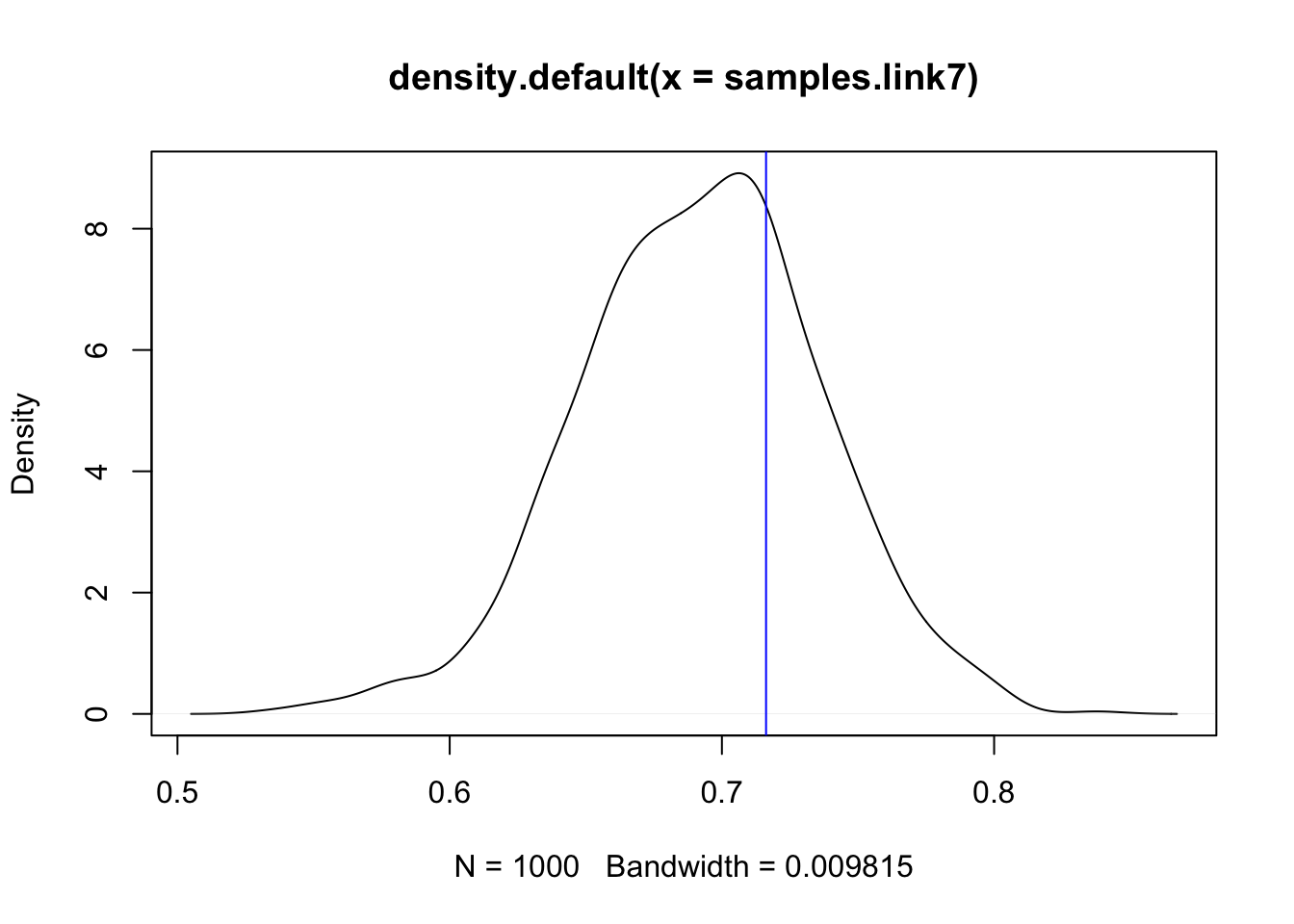

6.2 Transforming the predictor samples to data-value samples

We now need to transform through the link function and the data sampling. We know we use the logit transform, but we can doublecheck this:

res1$.args$control.family$control.link$model## NULLYou can set up the logit function yourself, or use

inla.link.invlogit(x). We transform the samples through the

link.

samples.link7 = inla.link.invlogit(samples.pred7)

plot(density(samples.link7))

## Add the naive estimate of binomial probability y/N:

abline(v = df$y[7]/df$Ntrials[7], col="blue")

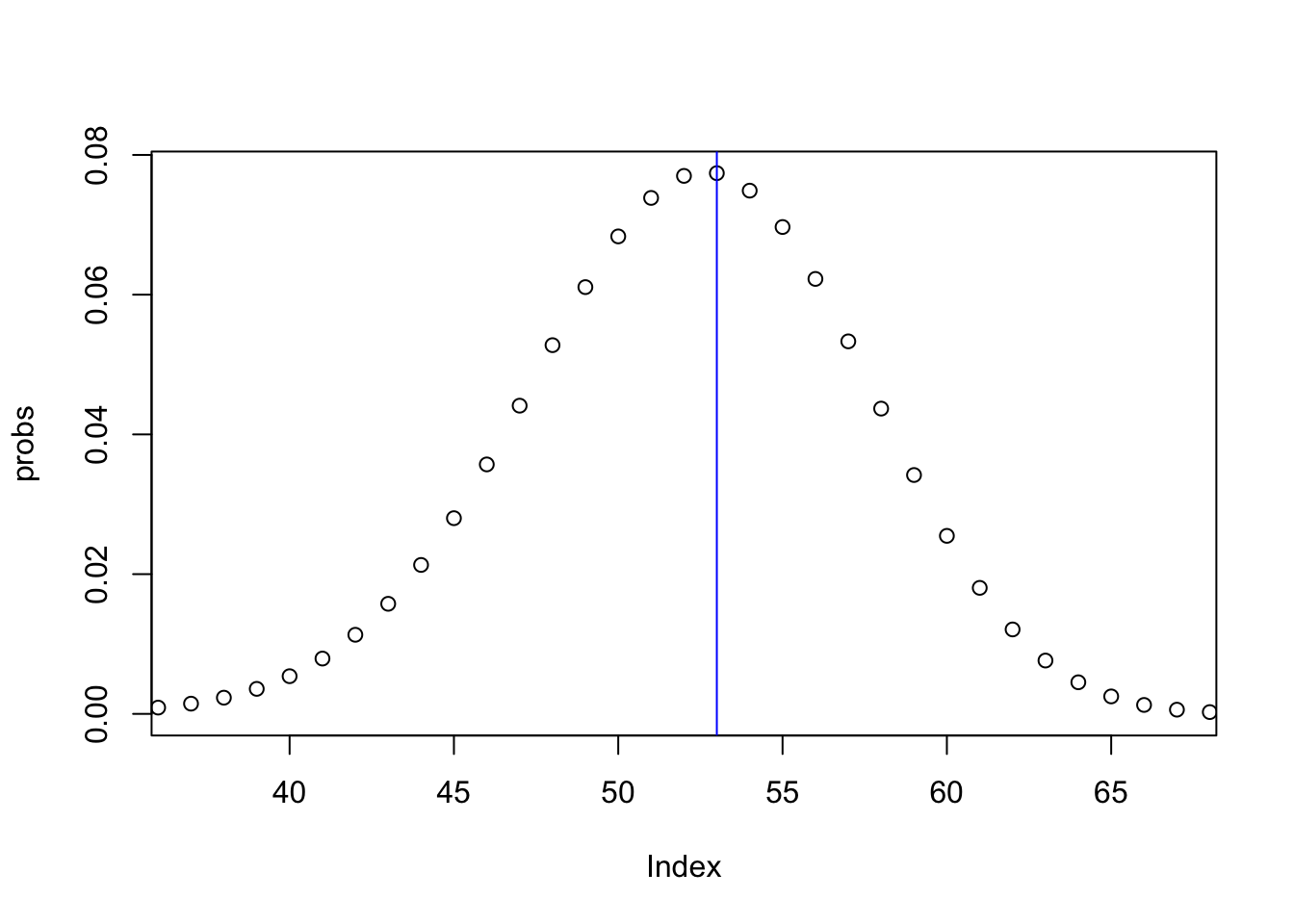

6.3 Adding observation likelihood randomness

The following code is specific to discrete likelihoods, as we can compute all the densities exactly. What we do here is discrete integration, computing the probabilities exactly (on the samples that we have).

## What range of observations do we want to compute

discrete.range = 0:100

## Initialize the probabilities

probs = rep(0, length(discrete.range))

for (i in 1:length(samples.link7)) {

probs = probs + dbinom(discrete.range, size=df$Ntrials[7], prob = samples.link7[i])

}

probs = probs / length(samples)

names(probs) = discrete.rangeLet us now plot the results, together with the true value.

plot(probs, xlim=c(37, 67))

abline(v=df$y[7], col="blue")

6.4 Alternative way: Building up the samples model component by model component

It is important to know how to build up the (linear) predictor sample by adding together the model components (fixed effects and random effects), as this enables you to do anything! Knowing how to do this lets us predict in unsampled locations also.

## Remember the covariate values

df[7, ]## y Ntrials x1 x2 plate

## 7 53 74 0 1 7## Remember the formula

res1$.args$formula## y ~ x1 + x2 + f(plate, model = "iid", hyper = hyper1)

## NULLFor sample nr 57 (as an example) we add it all up:

nr = 57

s = samples[[nr]]$latent

## beta1 * 0

f.x1.0 = s[44, , drop=F] * 0

## beta1 * 1

f.x2.1 = s[45, , drop=F] * 1

## f(plate = 7)

f.plate.7 = s[28, , drop=F]

## The intercept

int = s[43, , drop=F]

sum = drop(f.x1.0 + f.x2.1 + f.plate.7 + int)

list(model.component.sum.7 = sum, linear.predictor.7 = samples.pred7[nr])## $model.component.sum.7

## x1:1

## 0.77

##

## $linear.predictor.7

## [1] 0.77These two numbers are equal, or approximately equal, as they

represent the exact same thing! To do this for all the sample numbers,

create a for loop for (nr in 1:length(samples)).

7 Predicting for a different experiment on an old plate

Using a similar method to what we just did, we can create a new experiment, and predict it.

our.experiment = list(x1 = 0, x2 = 0, plate = 7)

nr = 57

s = samples[[nr]]$latent

## beta1 * 0

f.x1.0 = s[44, , drop=F] * our.experiment$x1

## beta1 * 1

f.x2.1 = s[45, , drop=F] * our.experiment$x2

## f(plate = 7)

# - the same plate as before

f.plate.7 = s[28, , drop=F]

## The intercept

int = s[43, , drop=F]

predictor.our.experiment = drop(f.x1.0 + f.x2.1 + f.plate.7 + int)Again, to get all the samples, create a for loop. Then go through the link and the likelihood as we did in the predictor example.

8 Predicting for a different experiment on a new plate

Now, we cannot use the sample from plate number 7. We want a new plate, let us call it plate number 50. Indeed, the result of our INLA model does not include a plate number 50.

Even though there is no information in our data about what happens at plate number 50, there is information about what happens on plates “in general”. This information lies in the pooling parameter sigma/precision for plate. Let us find our sample for this sigma/precision.

## As before:

our.experiment = list(x1 = 0, x2 = 0, plate = 50)

nr = 57

## Want a hyper-parameter

plate.precision = samples[[nr]]$hyperpar[1]

plate.sigma = plate.precision^-0.5

names(plate.sigma) = "sigma"

print(plate.sigma)## sigma

## 0.59Our model for plate was a Gaussian IID effect, with standard deviation sigma. So, we can draw a sample ourselves for this new plate:

sample.plate50 = rnorm(1, sd=plate.sigma)And then we can complete our sample number nr:

## As before

s = samples[[nr]]$latent

f.x1.0 = s[44, , drop=F] * our.experiment$x1

f.x2.1 = s[45, , drop=F] * our.experiment$x2

## f(plate = 50)

f.plate.50 = sample.plate50

## The intercept

int = s[43, , drop=F]

predictor.experiment.newplate = drop(f.x1.0 + f.x2.1 + f.plate.50 + int)A for-loop is needed to produce all the samples. We also need transformation through the link and adding the likelihood.

9 Comments